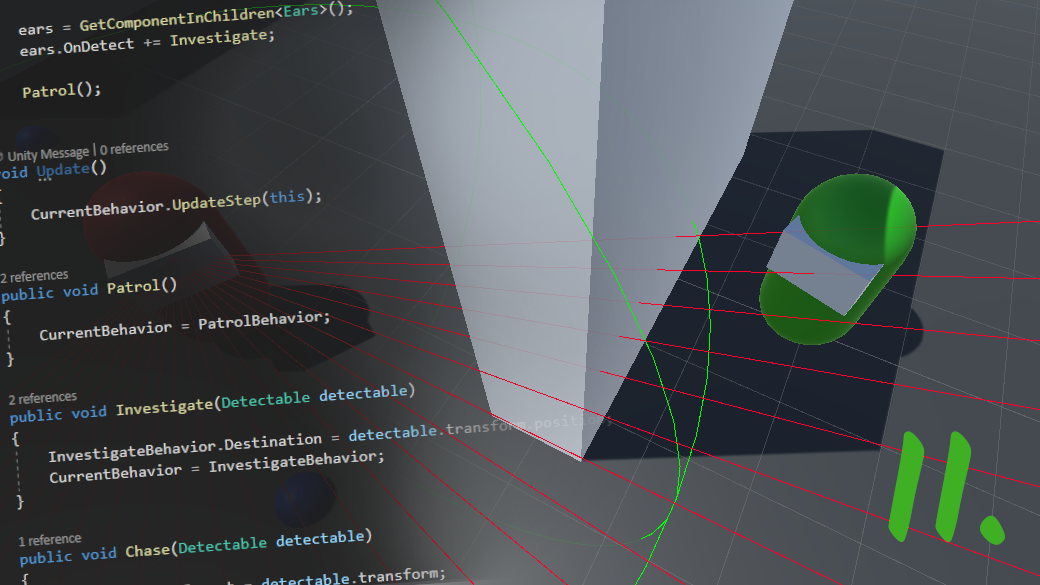

In the previous part, we've seen how to implement senses like ears and eyes for an NPC. We've also seen how useful inheritance can be and learned a bit about the vector math that is ubiquitous in game development.

Today, I'm going to guide you through an implementation of AI behavior for patrolling between points, chasing the player when it's seen, and investigating a location where the player has been heard.

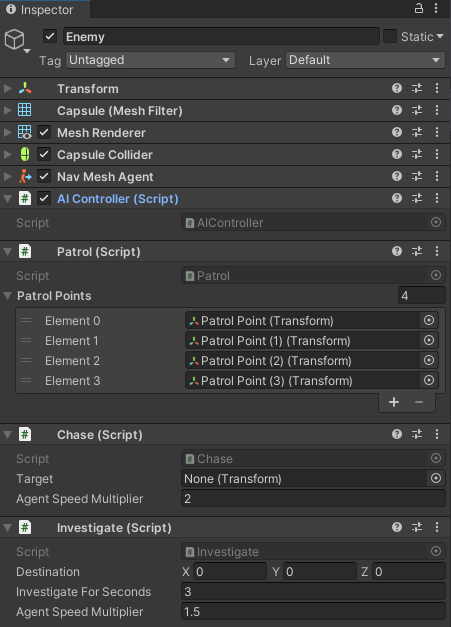

The implementation consists of an AIController class that governs a Unity NavMeshAgent according to the currently selected behavior. The concrete behaviors, Patrol, Chase and Investigate, are derived from an AIBehaviour base class, and AIController holds an instance of each of them.

AIController has exactly one member of the base type AIBehaviour that holds the current behavior, so it can execute one and only one behavior at a time. From that point of view, it can only be in one state at a time. Hence, what we have here is the State pattern, where behaviors represent the states.
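To make the State pattern concrete outside of Unity, here is a minimal, engine-free C# sketch. StateBase, StateMachine, Idle and Alert are hypothetical names standing in for AIBehaviour, AIController and the concrete behaviors. The Log list exists only so the order of calls can be checked. The sketch mirrors the idea of a single current state whose setter lets the old state clean up before the new one sets itself up.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, engine-free names: StateBase ~ AIBehaviour, StateMachine ~ AIController.
public abstract class StateBase
{
    // Optional hooks with empty defaults (like Activate/Deactivate).
    public virtual void Enter(StateMachine machine) { }
    public virtual void Exit(StateMachine machine) { }

    // Mandatory per-frame logic (like UpdateStep).
    public abstract void Tick(StateMachine machine);
}

public class StateMachine
{
    private StateBase current;

    // Records calls so the order of transitions can be inspected (sketch-only helper).
    public List<string> Log { get; } = new List<string>();

    public StateBase Current
    {
        get => current;
        set
        {
            current?.Exit(this); // the old state cleans up first (null on first assignment)
            value.Enter(this);   // then the new state sets itself up
            current = value;     // only one state is ever current
        }
    }

    // Called once per frame by the owner; delegates to whichever state is active.
    public void Tick() => current.Tick(this);
}

public class Idle : StateBase
{
    public override void Tick(StateMachine machine) => machine.Log.Add("idle tick");
}

public class Alert : StateBase
{
    public override void Enter(StateMachine machine) => machine.Log.Add("alert enter");
    public override void Tick(StateMachine machine) => machine.Log.Add("alert tick");
    public override void Exit(StateMachine machine) => machine.Log.Add("alert exit");
}
```

Assigning idle, ticking, assigning alert, ticking and then assigning idle again records `idle tick`, `alert enter`, `alert tick`, `alert exit`, in that order.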

AIController also contains methods that are subscribed to the OnDetect and OnLost UnityActions of the senses from the previous part.

When, for example, Eyes detects the player, the AIController method that sets the current behavior to Chase is called, and a reference to the player's Detectable component is passed as the action's argument. That reference is then passed on to the Chase behavior, where it is used to set the target (the player) to be chased.

Here we have yet another, and probably the most common, behavioral design pattern: the Observer, sometimes also called the Publish-Subscribe pattern.
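Below is a minimal sketch of the same Observer wiring in plain C#. It makes two assumptions: System.Action&lt;T&gt; events replace the UnityAction delegates used by the senses, and a string replaces the Detectable reference. Sensor and Guard are hypothetical classes. The sensor only raises events and does not know who listens; the guard decides what each event means for its state.

```csharp
using System;

// Hypothetical publisher, standing in for Eyes/Ears.
// Action<string> is used here in place of UnityAction<Detectable>.
public class Sensor
{
    public event Action<string> OnDetect;
    public event Action<string> OnLost;

    // Raising an event notifies every subscriber (if there are any).
    public void Detect(string target) => OnDetect?.Invoke(target);
    public void Lose(string target) => OnLost?.Invoke(target);
}

// Hypothetical subscriber, standing in for AIController.
public class Guard
{
    public string State { get; private set; } = "Patrol";
    public string LastTarget { get; private set; }

    public Guard(Sensor eyes)
    {
        // Mirrors eyes.OnDetect += Chase; and eyes.OnLost += Investigate; in AIController.Start.
        eyes.OnDetect += Chase;
        eyes.OnLost += Investigate;
    }

    private void Chase(string target) { State = "Chase"; LastTarget = target; }
    private void Investigate(string target) { State = "Investigate"; LastTarget = target; }
}
```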

One last thing before we dive into the implementation of AIController. If you haven't done so already in the previous part, I encourage you to get the final example from GitHub and open it in Unity 2020.3.17f1, so you can see the code I'm going to describe in its full context.

AIController

As mentioned in the introduction, AIController governs a NavMeshAgent, so we need a using directive for the UnityEngine.AI namespace. Once again, we also use the RequireComponent attribute to specify that our AIController class depends on NavMeshAgent and on the concrete behavior implementations.

```csharp
using UnityEngine;
using UnityEngine.AI;

[RequireComponent(typeof(NavMeshAgent))]
[RequireComponent(typeof(Patrol))]
[RequireComponent(typeof(Investigate))]
[RequireComponent(typeof(Chase))]
public class AIController : MonoBehaviour
{
    private AIBehaviour currentBehavior;

    public AIBehaviour CurrentBehavior
    {
        get => currentBehavior;
        private set
        {
            // Let the outgoing behavior clean up first (null on the very first assignment).
            currentBehavior?.Deactivate(this);
            // Then let the incoming behavior configure the agent.
            value.Activate(this);
            currentBehavior = value;
        }
    }

    private Patrol PatrolBehavior;
    private Investigate InvestigateBehavior;
    private Chase ChaseBehavior;
    private NavMeshAgent agent;

    // ...
```

Before moving on to the next lines of the AIController class, notice how the setter of the CurrentBehavior property calls Deactivate on the current behavior and then Activate on the new value, passing a reference to this AIController in both cases, right before the new value is assigned to the currentBehavior field. Why we do it this way will become clear soon.

One last thing about this part of the code. In this simple example, the concrete behaviors are exclusive components of an Enemy. There's also a slightly more advanced approach, with its own pros and cons: keeping a pool of behaviors that Enemies "borrow" from. I'm not going into further detail about it now, so let's move on.

We also want to expose some members and methods of NavMeshAgent, cache the agent's initial speed, and prepare a few useful one-line functions for governing the agent from AIController without exposing the agent itself.

```csharp
    // ...

    // Read-only views of the agent's state, so behaviors never touch the agent directly.
    public float RemainingDistance { get => agent.remainingDistance; }
    public float StoppingDistance { get => agent.stoppingDistance; }

    public void SetDestination(Vector3 destination) => agent.SetDestination(destination);

    private float defaultAgentSpeed;

    // Speed changes are always relative to the cached default, so they don't compound.
    public void MultiplySpeed(float factor) => agent.speed = defaultAgentSpeed * factor;
    public void SetDefaultSpeed() => agent.speed = defaultAgentSpeed;

    // ...
```
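Caching defaultAgentSpeed means every speed change is computed from the original value rather than from the current one. The engine-free sketch below makes two assumptions: a hypothetical FakeAgent with a public Speed field takes the place of NavMeshAgent, and a hypothetical AgentFacade takes the place of AIController. The value 3.5f is just an arbitrary starting speed.

```csharp
// Hypothetical stand-in for NavMeshAgent.
public class FakeAgent
{
    public float Speed = 3.5f; // arbitrary starting value for the sketch
}

// Hypothetical stand-in for AIController: the agent stays private, only one-liners are exposed.
public class AgentFacade
{
    private readonly FakeAgent agent;
    private readonly float defaultSpeed;

    public AgentFacade(FakeAgent agent)
    {
        this.agent = agent;
        defaultSpeed = agent.Speed; // cached once, like defaultAgentSpeed in Start
    }

    public float Speed => agent.Speed;

    // Multiplies the cached default, not the current speed, so repeated calls don't stack up.
    public void MultiplySpeed(float factor) => agent.Speed = defaultSpeed * factor;

    public void SetDefaultSpeed() => agent.Speed = defaultSpeed;
}
```

Calling MultiplySpeed(2f) twice leaves the speed at 7, not 14, and SetDefaultSpeed restores 3.5.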

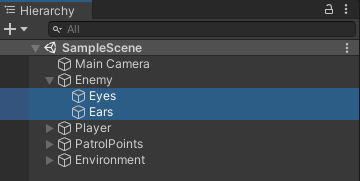

We take a similar approach with Eyes and Ears. There's only one small difference: neither of them is a component of the Enemy game object itself. Each has its own game object, which is a child of the Enemy.

```csharp
    // ...

    private Eyes eyes;
    private Ears ears;

    // Deactivating the Ears child game object switches hearing off.
    public void IgnoreEars(bool ignore) => ears.gameObject.SetActive(!ignore);

    // ...
```

Now we're getting to the Start method. Here we assign references to the components our AIController class depends on, using the GetComponent and GetComponentInChildren methods. We also subscribe methods to the OnDetect and OnLost events of our eyes and ears, and call Patrol, which sets the current behavior to patrolling.

```csharp
    // ...

    void Start()
    {
        // Cache the components this controller depends on.
        agent = GetComponent<NavMeshAgent>();
        defaultAgentSpeed = agent.speed;

        PatrolBehavior = GetComponent<Patrol>();
        InvestigateBehavior = GetComponent<Investigate>();
        ChaseBehavior = GetComponent<Chase>();

        // Eyes and Ears live on child game objects.
        eyes = GetComponentInChildren<Eyes>();
        eyes.OnDetect += Chase;
        eyes.OnLost += Investigate;

        ears = GetComponentInChildren<Ears>();
        ears.OnDetect += Investigate;

        // Start in the patrolling state.
        Patrol();
    }

    // ...
```

The Update method is super simple: we only call UpdateStep on CurrentBehavior, once again passing a reference to this AIController.

```csharp
    // ...

    void Update()
    {
        // Delegate the per-frame work to whichever behavior is current.
        CurrentBehavior.UpdateStep(this);
    }

    // ...
```

The rest of the AIController class consists of methods for switching between behaviors. Two of them, Investigate and Chase, are subscribed to the OnDetect and OnLost events, as we've seen in the Start method.

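```csharp
    // ...

    public void Patrol()
    {
        CurrentBehavior = PatrolBehavior;
    }

    // Subscribed to eyes.OnLost and ears.OnDetect.
    public void Investigate(Detectable detectable)
    {
        // Set before switching, because the setter calls Activate, which uses Destination.
        InvestigateBehavior.Destination = detectable.transform.position;
        CurrentBehavior = InvestigateBehavior;
    }

    // Subscribed to eyes.OnDetect.
    public void Chase(Detectable detectable)
    {
        ChaseBehavior.Target = detectable.transform;
        CurrentBehavior = ChaseBehavior;
    }
}
```

Behaviors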

As we've seen in the previous section, AIController has the CurrentBehavior property of type AIBehaviour. AIBehaviour is the base class for all the concrete behaviors.

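```csharp
using UnityEngine;

public abstract class AIBehaviour : MonoBehaviour
{
    // Optional: called when this behavior becomes the current one.
    public virtual void Activate(AIController aIController) { }

    // Mandatory: called every frame while this behavior is current.
    public abstract void UpdateStep(AIController aIController);

    // Optional: called when another behavior replaces this one.
    public virtual void Deactivate(AIController aIController) { }
}
```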

CurrentBehavior in AIController never references a direct instance of AIBehaviour (it can't, since the class is abstract). It always references one of its child classes: Patrol, Investigate, or Chase. Each of them provides its own logic for UpdateStep, which is mandatory, and may optionally provide logic for the Activate and Deactivate methods.

What we take advantage of here is called polymorphism, a fancy name for a fairly simple concept. We could have used an interface instead of an abstract class. However, a class that implements an interface must implement every method the interface declares.

Since not all behaviors need logic in Activate and Deactivate, as we'll see in the following sub-sections, an interface would leave us with the same empty methods in multiple behaviors.

Plus, with an abstract base class like this, we can see just by looking at AIBehaviour that only UpdateStep must be implemented in child classes, while Activate and Deactivate are optional.
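To make the trade-off concrete, here is a small, hypothetical comparison in plain C#, without MonoBehaviour or the AIController parameter. IBehaviourContract forces every implementer to spell out all three members. BehaviourBase, shaped like AIBehaviour, lets a subclass override only UpdateStep.

```csharp
// Option 1 (hypothetical): an interface. Every implementer must supply all three members.
public interface IBehaviourContract
{
    void Activate();
    void UpdateStep();
    void Deactivate();
}

public class PatrolViaInterface : IBehaviourContract
{
    public int Steps;
    public void Activate() { }            // forced empty body
    public void UpdateStep() => Steps++;
    public void Deactivate() { }          // forced empty body
}

// Option 2 (hypothetical): an abstract base class shaped like AIBehaviour.
// Optional hooks are virtual no-ops; only the abstract member must be overridden.
public abstract class BehaviourBase
{
    public virtual void Activate() { }
    public abstract void UpdateStep();
    public virtual void Deactivate() { }
}

public class PatrolViaBase : BehaviourBase
{
    public int Steps;
    public override void UpdateStep() => Steps++;
}
```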

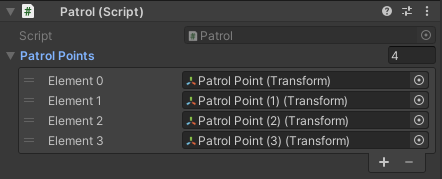

Patrol

```csharp
using UnityEngine;

public class Patrol : AIBehaviour
{
    public Transform[] PatrolPoints;

    private int currentPPIndex;

    public override void Activate(AIController controller)
    {
        controller.SetDefaultSpeed();
        // Head to the current patrol point (index 0 the first time).
        controller.SetDestination(PatrolPoints[currentPPIndex].position);
    }

    public override void UpdateStep(AIController controller)
    {
        // Close enough to the current point: move on to the next one, wrapping to 0 after the last.
        if (controller.RemainingDistance <= controller.StoppingDistance)
        {
            currentPPIndex = currentPPIndex < PatrolPoints.Length - 1 ? currentPPIndex + 1 : 0;
            controller.SetDestination(PatrolPoints[currentPPIndex].position);
        }
    }
}
```

In the Activate method of the Patrol behavior, we set the default speed (remember how we cache the agent's initial speed in AIController?). We also set the current patrol point as the agent's destination; initially, that is element zero of the PatrolPoints array of Transform components.

In UpdateStep, when the agent reaches its destination (or is close enough, to be precise), the next element of the PatrolPoints array is selected as the next target. If the current target is the last element, the first one is selected again.
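The wrap-around step can be tried in isolation. In this sketch, PatrolRoute is a hypothetical helper that uses strings instead of Transform patrol points. Advance uses the same ternary as Patrol.UpdateStep, and NextIndex shows the equivalent form using the remainder operator.

```csharp
// Hypothetical helper: plain strings stand in for Transform patrol points.
public class PatrolRoute
{
    private readonly string[] points;
    private int index;

    public PatrolRoute(params string[] points) => this.points = points;

    public string Current => points[index];

    // Same logic as the ternary in Patrol.UpdateStep: advance, or go back to 0 after the last point.
    public string Advance()
    {
        index = index < points.Length - 1 ? index + 1 : 0;
        return points[index];
    }

    // Equivalent one-liner using the remainder operator.
    public static int NextIndex(int index, int length) => (index + 1) % length;
}
```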

Investigate

```csharp
using UnityEngine;

public class Investigate : AIBehaviour
{
    // Set by AIController.Investigate before this behavior is activated.
    public Vector3 Destination;
    public int InvestigateForSeconds = 3;
    public float AgentSpeedMultiplier = 1.5f;

    private bool isInvestigating;
    private float investigationStartTime;

    public override void Activate(AIController controller)
    {
        controller.MultiplySpeed(AgentSpeedMultiplier);
        controller.SetDestination(Destination);
    }

    public override void UpdateStep(AIController controller)
    {
        // Arrived (close enough) for the first time: start the investigation clock.
        if (controller.RemainingDistance <= controller.StoppingDistance && !isInvestigating)
        {
            isInvestigating = true;
            investigationStartTime = Time.time;
        }

        // Waited long enough: stop investigating and go back to patrolling.
        if (isInvestigating && Time.time > investigationStartTime + InvestigateForSeconds)
        {
            isInvestigating = false;
            controller.Patrol();
        }
    }

    public override void Deactivate(AIController aIController)
    {
        // Reset so the next activation starts from scratch.
        isInvestigating = false;
    }
}
```

Investigate is a little more complex, but not much. It has a Vector3 Destination: a location in the scene that AIController's Investigate method sets to the position of the detected Detectable (attached to our Player).

In Activate, we increase the agent's speed so that it moves a bit faster toward the position where the player was heard (when the OnDetect event on Ears was invoked), or last seen (when Eyes invoked OnLost). We also set that location as the agent's destination.

In UpdateStep, when the agent reaches the destination, we flip the isInvestigating flag to true and set investigationStartTime to the current time (Time.time is the time in seconds since the start of the application).

UpdateStep is called again in the next frame, since AIController calls it from its Update method. In that call, the first condition is false because isInvestigating is already true. The second condition becomes true once the current time exceeds the time the destination was reached plus InvestigateForSeconds (3 seconds by default). At that point, we reset the flag and switch back to patrolling by calling controller.Patrol().

In Deactivate, we want to set isInvestigating back to false, to have this behavior in its initial state when it's activated next time.
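Here is the two-phase check from UpdateStep, together with the reset done in Deactivate, as an engine-free sketch. Instead of reading RemainingDistance and Time.time, it assumes the caller passes in whether the agent has arrived and the current time, so the logic can be stepped by hand. InvestigationTimer, Step and Reset are hypothetical names.

```csharp
// Hypothetical, engine-free version of Investigate's timing logic.
// "arrived" replaces the RemainingDistance check and "now" replaces Time.time.
public class InvestigationTimer
{
    public float InvestigateForSeconds = 3f;

    private bool isInvestigating;
    private float investigationStartTime;

    // Returns true on the frame the behavior should hand control back to patrolling.
    public bool Step(bool arrived, float now)
    {
        // Phase 1: the first frame at the destination starts the clock.
        if (arrived && !isInvestigating)
        {
            isInvestigating = true;
            investigationStartTime = now;
        }

        // Phase 2: once the wait has elapsed, stop and signal the switch.
        if (isInvestigating && now > investigationStartTime + InvestigateForSeconds)
        {
            isInvestigating = false;
            return true;
        }
        return false;
    }

    // Mirrors Deactivate: leave the timer ready for the next activation.
    public void Reset() => isInvestigating = false;
}
```

The comparison is strict. With the clock started at 1 second, Step still returns false at exactly 4 seconds and returns true on the first call after that.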

Chase

```csharp
using UnityEngine;

public class Chase : AIBehaviour
{
    // Set by AIController.Chase before this behavior is activated.
    public Transform Target;
    public float AgentSpeedMultiplier = 2f;

    public override void Activate(AIController controller)
    {
        controller.MultiplySpeed(AgentSpeedMultiplier);
        controller.IgnoreEars(true);
    }

    public override void UpdateStep(AIController controller)
    {
        // Re-target every frame, since the player keeps moving.
        controller.SetDestination(Target.position);
    }

    public override void Deactivate(AIController controller)
    {
        controller.IgnoreEars(false);
    }
}
```

As you can see, the Chase behavior is the simplest one. In Activate, we multiply the agent's speed so that it actually chases our player instead of walking towards it at the same speed as when patrolling around.

We also turn off Ears, because once the Enemy sees the player, it no longer cares whether it can hear it. We also don't want events raised by Ears to interfere with the Chase behavior.

In UpdateStep, we keep setting the agent's destination to the player's current position. Target has already been set to our player's Transform component in AIController's Chase method.

And finally, in Deactivate method, we just turn the Ears component on again.

Conclusion

This concludes the two-part mini-series about AI senses. In the previous part, we saw how to implement senses like ears and eyes, and in today's part, we've dived into the implementation of reactive behaviors.

We've also taken advantage of two common behavioral design patterns, the State pattern and the Observer pattern, and seen a bit of vector math in practice.

I hope you liked it. Bear in mind that this is just one, rather simple, solution. AI programming is a broad and very interesting topic with lots of deep rabbit holes. However, in a small-scope game made with Unity, you can pretty much get away with this.